28. Color Classify

Color Classify

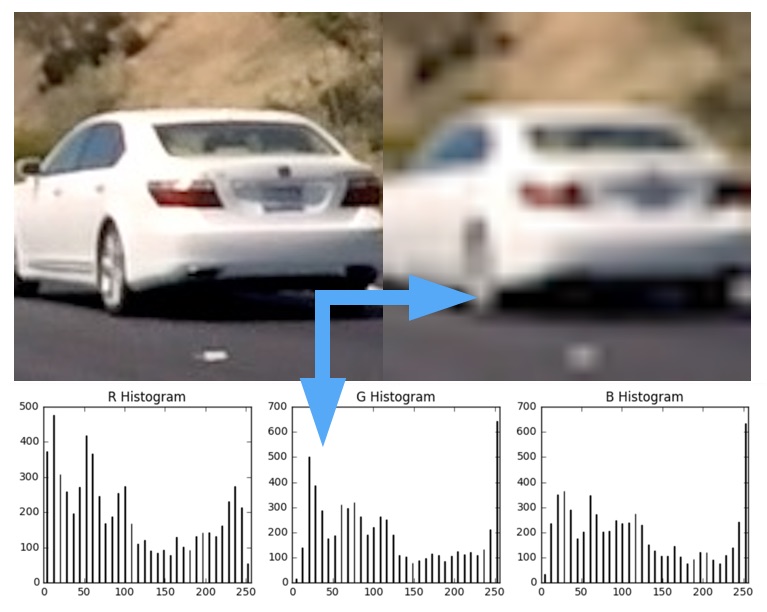

Now we'll try training a classifier on our dataset. First, we'll see how well it does just using spatially binned color and color histograms.

To do this, we'll use the functions you defined in previous exercises, namely,

bin_spatial()

,

color_hist()

, and

extract_features()

. We'll then read in our car and non-car images, and extract the color features for each.

All that remains is to define a labels vector, shuffle and split the data into training and testing sets, scale the feature vectors to zero mean and unit variance, and finally, define a classifier and train it!

Our labels vector

y

in this case will just be a binary vector indicating whether each feature vector in our dataset corresponds to a car or non-car (1's for cars, 0's for non-cars). Given lists of car and non-car features (the output of

extract_features()

) we can define a labels vector like this:

import numpy as np

# Define a labels vector based on features lists

y = np.hstack((np.ones(len(car_features)),

np.zeros(len(notcar_features))))Next, we'll stack our feature vectors like before:

# Create an array stack of feature vectors

X = np.vstack((car_features, notcar_features)).astype(np.float64)

And now we're ready to shuffle and split the data into training and testing sets. To do this we'll use the Scikit-Learn

train_test_split()

function, but it's worth noting that recently, this function moved from the

sklearn.cross_validation

package (in

sklearn

version <=0.17) to the

sklearn.model_selection

package (in

sklearn

version >=0.18).

In the quiz editor we're still running

sklearn

v0.17, so we'll import it like this:

from sklearn.cross_validation import train_test_split

# But, if you are using scikit-learn >= 0.18 then use this:

# from sklearn.model_selection import train_test_split

train_test_split()

performs both the shuffle and split of the data and you'll call it like this (here choosing to initialize the shuffle with a different random state each time):

# Split up data into randomized training and test sets

rand_state = np.random.randint(0, 100)

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=rand_state)Now that we have split into training and test sets, we can scale our features. It's important to do the scaling after splitting the data, otherwise you are allowing the scaler to peer into your test data!

from sklearn.preprocessing import StandardScaler

# Fit a per-column scaler only on the training data

X_scaler = StandardScaler().fit(X_train)

# Apply the scaler to both X_train and X_test

scaled_X_train = X_scaler.transform(X_train)

scaled_X_test = X_scaler.transform(X_test)#### Warning: when dealing with image data that was extracted from video, you may be dealing with sequences of images where your target object (vehicles in this case) appear almost identical in a whole series of images. In such a case, even a randomized train-test split will be subject to overfitting because images in the training set may be nearly identical to images in the test set. For the subset of images used in the next several quizzes, this is not a problem, but to optimize your classifier for the project, you may need to worry about time-series of images!

Now, you're ready to define and train a classifier! Here we'll try a Linear Support Vector Machine. To define and train your classifier it takes just a few lines of code:

from sklearn.svm import LinearSVC

# Use a linear SVC (support vector classifier)

svc = LinearSVC()

# Train the SVC

svc.fit(scaled_X_train, y_train)Then you can check the accuracy of your classifier on the test dataset like this:

print('Test Accuracy of SVC = ', svc.score(scaled_X_test, y_test))Or you can make predictions on a subset of the test data and compare directly with ground truth:

print('My SVC predicts: ', svc.predict(scaled_X_test[0:10].reshape(1, -1)))

print('For labels: ', y_test[0:10])

Play with the parameter values

spatial

and

histbin

in the exercise below to see how the classifier accuracy and training time vary with the feature vector input.

Start Quiz: